- July 17, 2023

NVIDIA Megatron-Turing: Revolutionizing Natural Language Generation

NVIDIA NeMo Megatron is an end-to-end framework that incorporates techniques from NVIDIA Research and, according to NVIDIA, delivers over 30% faster training times for GPT-3 models.

Beyond faster training, the framework covers the full lifecycle of an LLM-powered service: data curation, training, inference, and evaluation. That breadth is what makes it practical for industries looking to add Large Language Models (LLMs) to their service portfolio.

If you are looking for a tool that combines speed, efficiency, and ease of use for training large language models, NVIDIA NeMo Megatron deserves attention. This post looks at what the framework offers, where LLMs fit in the market, and how NVIDIA is approaching natural language generation.

Technical Details

LLMs learn from large volumes of text and can contain up to trillions of parameters. Recent updates to the NeMo Megatron framework include a hyperparameter tool for optimizing training and deployment configurations. These updates make LLMs more accessible to industries applying them across domains.

NVIDIA Megatron Framework

NVIDIA Megatron is a PyTorch-based framework for training very large language models built on the transformer architecture. The framework supports training language models with up to 1 trillion parameters. The Megatron-Turing Natural Language Generation model (MT-NLG), trained with it, has 530 billion parameters and was reported to deliver leading accuracy across a range of natural language tasks. Models of this kind support tasks such as email phrase completion, document summarization, and live sports commentary. The University of Florida used the Megatron framework to develop GatorTron, described at its release as the world's largest clinical language model.
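To give a sense of why models at this scale need a framework that splits work across many GPUs, here is a rough back-of-the-envelope sketch. It is not part of Megatron. The function names are hypothetical, and the 2-bytes-per-parameter (half precision) and 80 GB-per-GPU figures are assumptions chosen for illustration. It counts weights only and ignores gradients, optimizer state, and activations, so real training needs considerably more memory.

```python
import math

# Assumptions for illustration only (not taken from the article):
BYTES_PER_PARAM_FP16 = 2   # one half-precision weight
GPU_MEMORY_GB = 80         # memory of a single hypothetical 80 GB accelerator


def weight_memory_gb(num_params: float,
                     bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory needed just to hold the model weights, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float,
                         gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on GPUs needed to store the weights alone."""
    return math.ceil(weight_memory_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    for name, params in [("MT-NLG (530B)", 530e9), ("1T-parameter model", 1e12)]:
        print(f"{name}: {weight_memory_gb(params):.0f} GB of weights, "
              f"at least {min_gpus_for_weights(params)} GPUs for weights alone")
```

Even under these optimistic assumptions, the weights of a 530-billion-parameter model do not fit on a single device, which is why the model has to be partitioned across GPUs.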

Capabilities

LLMs and related technologies can be categorized into five main areas:

- Open-source LLMs: Several open-source LLMs, such as BLOOM, are available. Self-hosting them can raise challenges around hosting and processor costs, latency, and regional availability. Alternatively, platforms like Hugging Face's inference API offer a low-cost way to use them.

- Customized LLMs: NVIDIA NeMo Megatron enables organizations to create fully customized, industry-specific LLMs. Enterprises can then offer tailored GPT-3 models as a service and distinguish themselves in the market.

- Hosted Commercial LLMs: Commercial providers such as OpenAI and AI21 Labs have gained significant attention. They offer hosted LLMs and services for applications including chatbot implementation and document summarization.

- No-Code/Low-Code Customization: To stand out, LLM providers must offer easy access through playgrounds, simplified no-code to low-code fine-tuning, and customization with industry-specific data. Accurate semantic search, visual clustering, cost-effectiveness, and strategic market alliances are additional differentiators.

- Competition from Smaller Companies: Smaller companies that build services, hosting, and development offerings on open-source models pose a threat to commercial LLM providers, who must keep innovating and differentiating to maintain a competitive edge.

Limitations

Despite their impressive capabilities, LLMs come with certain limitations:

- Hosting and Processor Costs: Running LLMs requires substantial compute, so hosting and processor expenses must be factored in.

- Latency Challenges: When regional or geographically distributed deployments are unavailable, latency can become an issue for LLM-based services.

- Differentiation and Monetization: To monetize their offerings effectively, LLM providers must differentiate through unique features, specialized services, and strategic partnerships.

Use Cases

LLMs find applications in various domains, including:

- Completion Prediction: LLMs excel at completing sentences or paragraphs in context, which improves text generation.

- Chatbots: LLMs perform well as the basis for highly contextual chatbots that hold natural, engaging conversations.

- Natural Language Tasks: LLMs achieve strong accuracy on completion prediction, reading comprehension, commonsense reasoning, natural language inference, and word sense disambiguation.

- Downstream Applications: Large-scale language models contribute to summarization, automatic dialogue generation, translation, semantic search, and code auto-completion.

Highlights

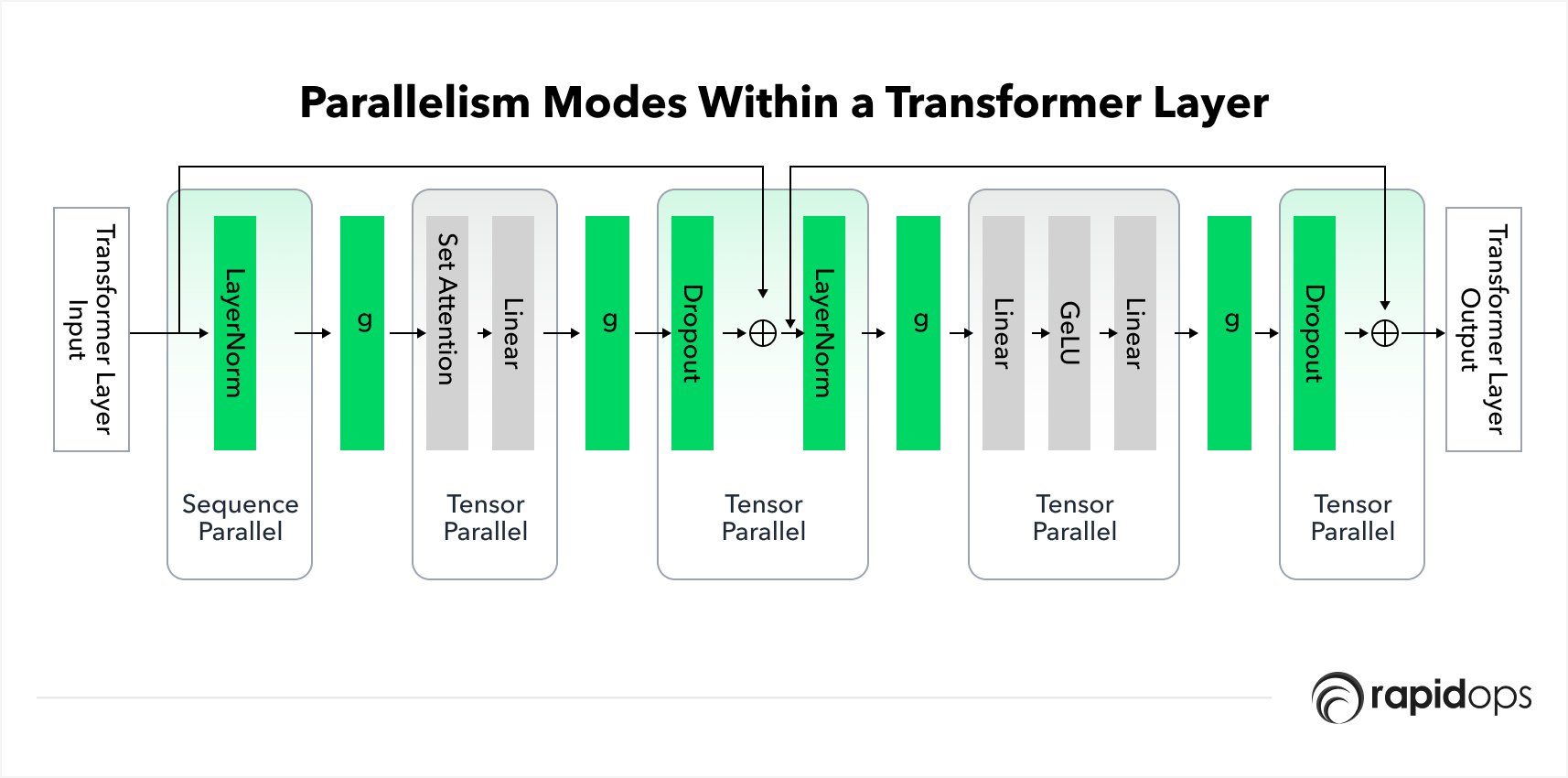

- Linear scalability for training models up to 1 trillion parameters using DGX SuperPOD, incorporating advanced optimizations and parallelization algorithms.

- Uses cuBLAS, NCCL, NVLink, and InfiniBand to train language models on multi-GPU, multi-node systems.

- Achieves more than a 100x throughput improvement when scaling from a 1 billion parameter model on 32 A100 GPUs to a 1 trillion parameter model on 3072 A100 GPUs.

- Sustains 50% utilization of Tensor Cores during training.
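The scaling figures above can be put in perspective with a little arithmetic. The sketch below is illustrative only. The function names are hypothetical, and the 312 TFLOP/s dense half-precision Tensor Core peak per A100 is an assumption taken from NVIDIA's published A100 specifications, not from this article. It also assumes the "100x" figure refers to aggregate throughput across all GPUs.

```python
# Assumed peak dense FP16/BF16 Tensor Core throughput of one A100, in TFLOP/s.
# This constant is an assumption for illustration, not a number from the article.
A100_PEAK_TFLOPS = 312.0


def gpu_scale_factor(small_gpus: int, large_gpus: int) -> float:
    """How many times more GPUs the larger run uses."""
    return large_gpus / small_gpus


def sustained_tflops_per_gpu(utilization: float,
                             peak_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Sustained per-GPU throughput at a given fraction of peak."""
    return utilization * peak_tflops


def aggregate_pflops(num_gpus: int, utilization: float,
                     peak_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Sustained throughput of the whole cluster, in PFLOP/s."""
    return num_gpus * sustained_tflops_per_gpu(utilization, peak_tflops) / 1000


if __name__ == "__main__":
    scale = gpu_scale_factor(32, 3072)
    print(f"GPU count grows {scale:.0f}x (32 -> 3072)")
    # If aggregate throughput grows by more than 100x on 96x the GPUs,
    # per-GPU throughput must have grown by more than 100 / 96.
    print(f"Implied per-GPU throughput gain: more than {100 / scale:.2f}x")
    print(f"At 50% utilization: {sustained_tflops_per_gpu(0.5):.0f} TFLOP/s per GPU, "
          f"about {aggregate_pflops(3072, 0.5):.0f} PFLOP/s across 3072 GPUs")
```

Read this way, a throughput gain of more than 100x on 96x the hardware means per-GPU efficiency did not drop as the cluster grew, which is what the "linear scalability" claim amounts to.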

Conclusion

The Megatron-Turing Natural Language Generation model (MT-NLG) is the product of a collaboration that combines NVIDIA Megatron-LM and Microsoft DeepSpeed. With 530 billion parameters, it was presented at release as the largest and most powerful monolithic transformer language model. Advances in large-scale language models, supported by efficient and scalable software design, open new possibilities for natural language processing applications and drive progress in AI research and development.
