- January 2025

Everything Businesses Must Know About Explainable AI

Would you trust an AI-driven decision if you couldn’t explain how it was made? In today’s business environment, where transparency and accountability are essential, can you afford to rely on a system that operates like a ‘black box’?

From approving loans to diagnosing patients and detecting fraud, AI makes high-stakes decisions across industries. However, without transparency, these AI systems could quickly turn from a strategic asset into a serious liability.

As regulatory scrutiny intensifies, businesses must ensure they can explain and justify AI-driven decisions to avoid potential lawsuits, fines, and reputational damage.

Explainable AI (XAI) is no longer a luxury; it’s a necessity. Leading organizations in the manufacturing, logistics, and retail sectors are embedding XAI into their strategies to mitigate risk, build trust, and comply with growing regulatory demands.

This article explores why XAI is crucial, the risks of ignoring it, and how you can implement it to safeguard and grow your business.

What is explainable AI?

As AI takes on high-stakes decision-making, one critical challenge emerges.

Can business leaders trust AI-driven outcomes if they don’t understand how they were made?

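Explainable AI refers to the methods and practices that make an AI system’s outputs understandable to the people who rely on them. Many AI systems, by contrast, operate as a black box, delivering recommendations with no visibility into their logic.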

This lack of visibility creates operational, financial, and regulatory risks, especially in industries where AI influences pricing, risk assessment, and logistics planning.

For enterprises scaling AI, explainability isn’t just about ticking a compliance box; it’s a competitive edge. Without it, businesses risk more than regulatory penalties; they face financial losses, eroded stakeholder trust, and decisions they can’t defend. In a world driven by data, transparency isn’t optional; it’s the foundation of lasting success.

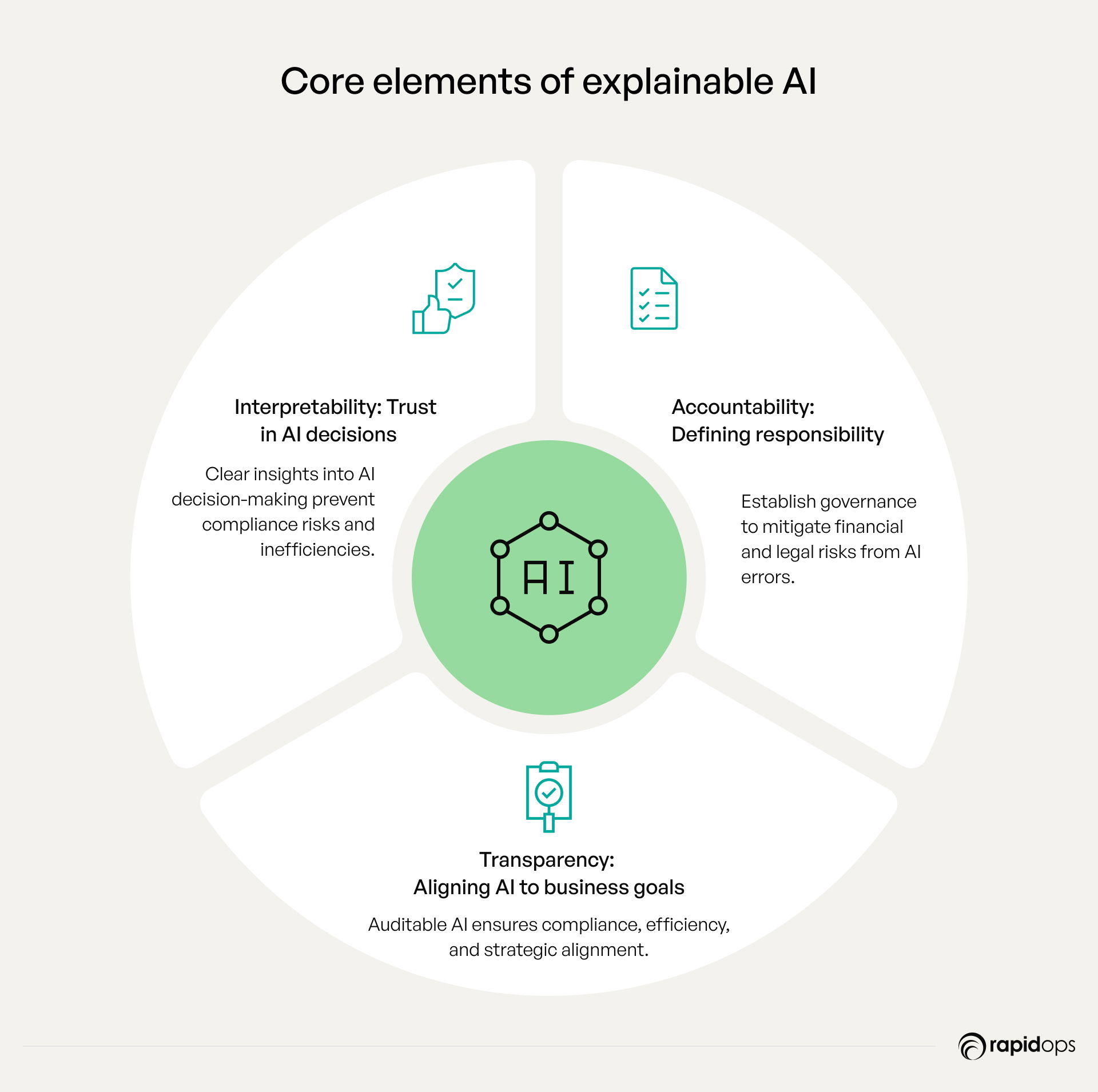

Key components of explainable AI

Explainable AI makes complex models understandable. It shows not just what decisions are made, but why. Here are the core components that make AI transparent and trustworthy.

1. Interpretability: Driving AI-backed business decisions with confidence

Leaders cannot rely on AI if they don’t understand how it reaches conclusions. A retailer deploying AI-powered dynamic pricing needs visibility into how AI sets price points to avoid alienating customers or triggering compliance issues.

Manufacturers using AI for predictive maintenance need to know why an algorithm predicts equipment failure; otherwise, they risk unnecessary downtime or missed failures. Without interpretability, AI adoption stalls, and trust erodes.
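To make the predictive maintenance case concrete, here is a minimal sketch of what an interpretable prediction can look like. It assumes a simple linear risk score; the feature names, weights, and bias are hypothetical and chosen only for illustration. Because the score is a sum of per-feature terms, each term shows how much a given sensor reading pushed the prediction up or down.

```python
from __future__ import annotations

# Hypothetical weights for a linear failure-risk score. In practice these
# would come from a fitted, inherently interpretable model.
WEIGHTS = {
    "vibration_mm_s": 0.08,
    "bearing_temp_c": 0.01,
    "hours_since_service": 0.0004,
}
BIAS = -1.2


def explain_risk(reading: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the risk score and each feature's additive contribution,
    ranked from most to least influential."""
    contributions = {name: WEIGHTS[name] * reading[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    reading = {"vibration_mm_s": 12.0, "bearing_temp_c": 70.0, "hours_since_service": 500.0}
    score, ranked = explain_risk(reading)
    print(f"risk score: {score:.2f}")
    for name, value in ranked:
        print(f"  {name}: {value:+.2f}")
```

A maintenance planner reading this output can see which signal drove the alert, rather than only that an alert was raised. More complex models need dedicated explanation techniques to produce a comparable breakdown.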

2. Accountability: Who owns AI-driven decisions?

As AI-driven automation scales, business leaders must establish clear accountability. If an AI system denies a loan, misclassifies defective products, or optimizes logistics routes inefficiently, who is responsible?

Without governance frameworks, AI failures can lead to financial penalties, reputational damage, and legal action. Organizations that proactively embed human oversight and governance structures ensure that AI aligns with strategic priorities rather than introducing unintended risks.

3. Transparency: Turning AI from a risk into a business enabler

In retail, logistics, and manufacturing, AI shapes critical decisions, from pricing strategies to supply chain optimization and predictive maintenance. Without transparency, businesses face compliance risks, inefficiencies, and operational blind spots.

Retailers must validate AI-driven pricing, logistics firms need explainable route optimization, and manufacturers require auditable predictive maintenance.

Transparency ensures AI-driven decisions are traceable, justifiable, and aligned with regulatory and business objectives.
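One way to make decisions traceable is to store a structured record alongside every automated decision. The sketch below is an assumption about what such a record might contain, not a prescribed standard; the function name and field names are hypothetical.

```python
import json
from datetime import datetime, timezone


def build_audit_record(decision_id, model_version, inputs, output, top_factors):
    """Serialize one AI decision, with its explanation, as a JSON audit entry.

    All field names are illustrative; adapt them to your own governance policy.
    """
    record = {
        "decision_id": decision_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # which model produced the decision
        "inputs": inputs,                # the data the model saw
        "output": output,                # what the model decided
        "top_factors": top_factors,      # the explanation attached to the decision
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(build_audit_record(
        decision_id="d-1",
        model_version="pricing-v3",
        inputs={"sku": "A1", "competitor_price": 21.50},
        output={"price": 19.99},
        top_factors=[["competitor_price", 0.6], ["stock_level", 0.3]],
    ))
```

Recording the model version and the top contributing factors with each decision means an auditor can later reconstruct why a given price, route, or maintenance flag was produced.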

Why explainable AI is crucial for business success

AI is reshaping industries, but without explainability, it can undermine trust, invite regulatory challenges, and expose businesses to unexpected risks. Forward-thinking leaders understand that transparency isn’t just a protective measure; it’s the key to fully harnessing AI’s power while maintaining control.

Building trust and accountability

AI systems must operate with the same transparency and reliability as human decision-making. Without explainability, stakeholders, including customers, regulators, and executives, struggle to trust AI-driven outcomes.

Businesses that invest in interpretable AI models will gain a competitive edge by fostering confidence in their AI-driven operations.

Compliance and regulatory readiness

Regulatory bodies are tightening AI oversight across industries. Retailers leveraging AI for dynamic pricing, manufacturers optimizing production, and logistics firms automating supply chains all face increasing compliance pressures.

Explainability ensures AI models remain auditable, fair, and legally defensible, helping organizations mitigate regulatory risks before they escalate.

Risk management and operational resilience

AI failures can disrupt business continuity; misleading demand forecasts, biased hiring algorithms, or flawed credit assessments can cost millions.

Explainable AI enables companies to identify, diagnose, and correct AI errors in real time, preventing unintended consequences that could erode revenue and market trust.

Strategic decision-making and competitive differentiation

AI should drive growth, not guesswork. Leaders need AI solutions that don’t just generate results, but explain them. Transparent AI helps businesses make smarter, faster decisions with confidence.

Organizations that embed explainability into AI governance will also be better positioned to defend those decisions, turning AI from a technological asset into a business differentiator.

AI’s true value lies in control, confidence, and credibility

The more explainable an AI system is, the greater control leaders have over its impact.

Companies that treat AI transparency as a core business priority will build trust, mitigate risks, and unlock AI’s full potential, ensuring AI doesn’t just enhance efficiency but also strengthens long-term business resilience.

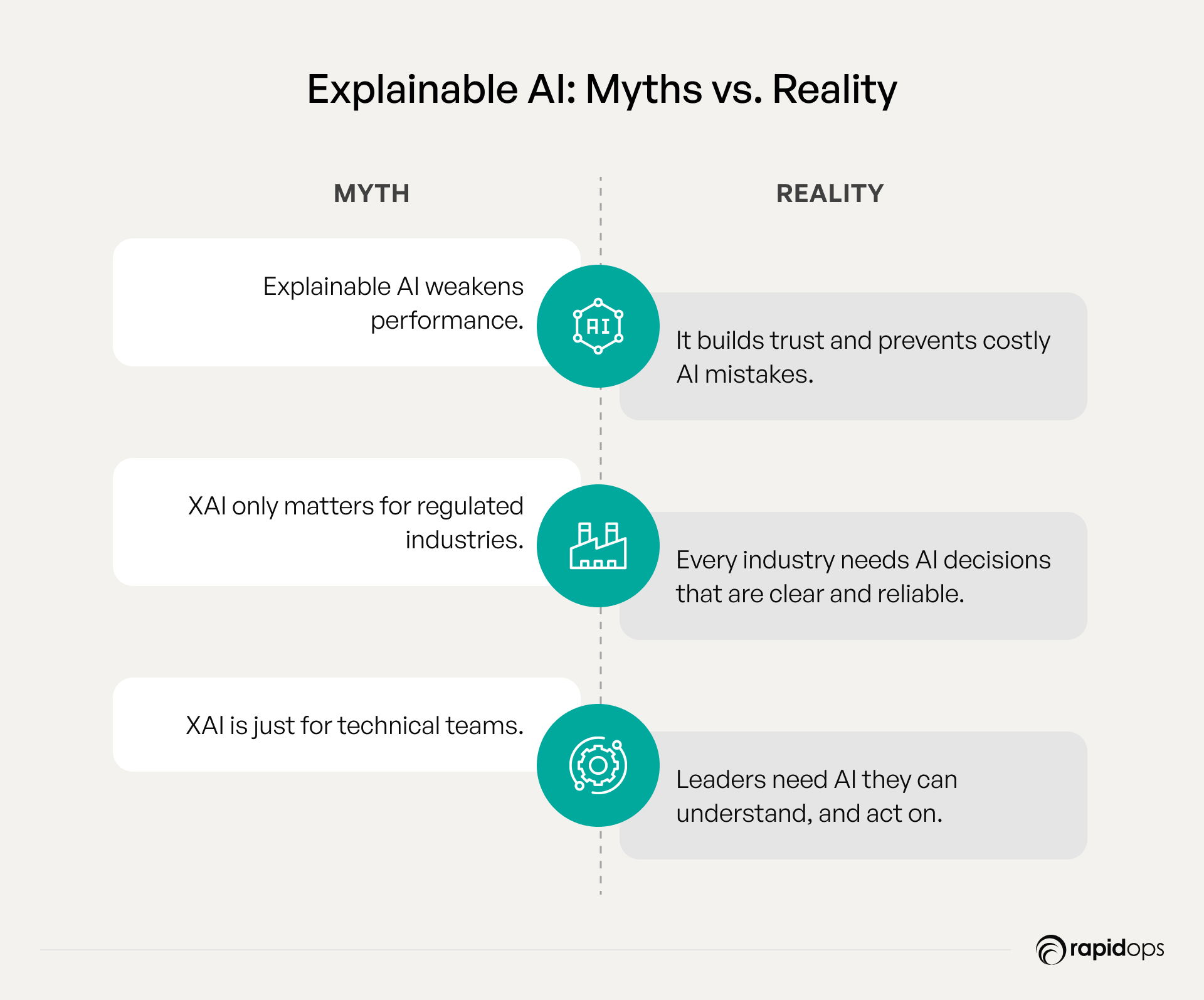

Common misconceptions about explainable AI

Despite its importance, explainable AI (XAI) is often misunderstood, leading to underuse and increased business risks. Clearing these misconceptions is crucial to maximizing AI’s potential.

Myth 1: Explainable AI is weaker AI

Reality: This is a common misconception. In fact, businesses using transparent AI can see improved decision-making accuracy, fewer costly errors, and faster regulatory approval.

Rather than weakening AI, explainability enhances its value by enabling businesses to trust AI’s outputs, making it easier to understand and act on predictions. XAI empowers decision-makers to integrate AI into strategies with confidence, ensuring operational efficiency and risk reduction.

Myth 2: XAI is only relevant for regulated industries

Reality: While regulated industries like finance and healthcare are early adopters of XAI, businesses across all sectors, including retail and manufacturing, can unlock significant benefits. In retail, for example, XAI helps optimize pricing models transparently, ensuring customer trust and compliance.

In manufacturing, it enhances predictive maintenance, minimizing downtime by explaining why certain equipment needs attention. XAI helps maintain transparency, reduce risks, and drive more efficient decision-making, no matter your industry.

Myth 3: XAI is only relevant for technical teams

Reality: XAI is not just for engineers and data scientists; it’s crucial for business leaders who need to make informed decisions. By providing clear, understandable insights into AI models, XAI empowers leaders to align strategies with real-world insights and mitigate risks.

For example, a leader in logistics can rely on XAI to understand and optimize route planning decisions, improving efficiency and customer satisfaction. It gives business stakeholders the tools to use AI responsibly and strategically.

Real-world examples of explainable AI in action

As businesses explore AI, integrating Explainable AI (XAI) becomes more than a technical necessity. It’s a strategic business advantage. Here’s how companies in retail and manufacturing have successfully implemented XAI, turning it into actionable value.

Retail: Dynamic pricing with transparent AI

A leading eCommerce retailer faced challenges with inconsistent pricing strategies, which impacted customer trust and regulatory compliance. To solve this, they partnered with Rapidops to implement AI-driven dynamic pricing while ensuring the AI’s decisions were transparent and easily understood.

Rapidops deployed an XAI framework that offered clear reasoning behind every price adjustment. This transparency helped the retailer optimize pricing strategies, maintain customer trust, and comply with industry regulations. As a result, the company saw improved customer loyalty, competitive pricing, and minimized legal risk.

Manufacturing: Predictive maintenance with explainable AI

A manufacturing company struggled with frequent equipment downtime and inefficient maintenance schedules, which led to costly delays. They sought to optimize their operations with AI-powered predictive maintenance but needed transparency to act confidently on AI’s recommendations.

Working with Rapidops, the company integrated an explainable AI system that clarified why specific equipment was flagged for maintenance. This transparency allowed for data-driven decisions, reducing downtime, optimizing maintenance schedules, and extending equipment life, leading to significant cost savings and enhanced operational efficiency.

Why this matters to you

Explainable AI is not just about transparency; it’s about building trust, mitigating risks, and empowering businesses to make informed, confident decisions. By embracing XAI, you ensure compliance, reduce uncertainty, and unlock AI’s full potential to drive sustainable growth. Whether you’re in retail, manufacturing, or another industry, XAI is a key enabler of long-term success and resilience in a rapidly evolving landscape.

Challenges of implementing explainable AI

As AI adoption accelerates, organizations must navigate key challenges in making AI systems both powerful and transparent. Addressing these barriers is critical to unlocking AI’s full potential while maintaining trust and control.

Balancing accuracy and interpretability

High-performing AI models often prioritize accuracy over transparency, creating a trade-off between complexity and explainability. Businesses can narrow that gap by using inherently interpretable models where they perform well enough, and by applying model-agnostic explanation methods, which treat the model as a black box and study how its outputs respond to changes in its inputs, where a more complex model is needed.
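As an illustration of a model-agnostic method, the sketch below implements permutation importance using only the Python standard library. The idea is to shuffle one input feature at a time and measure how much the model's accuracy drops; a large drop suggests the model relies on that feature. The function names are hypothetical, and the model is any callable that maps a row of numbers to a predicted label, so nothing here depends on how the model works internally.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)


def permutation_importance(
    model: Callable[[Row], int],
    rows: Sequence[Row],
    labels: Sequence[int],
    n_features: int,
    n_repeats: int = 10,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only column j, breaking its link to the labels.
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [
                list(row[:j]) + [value] + list(row[j + 1:])
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Toy black box that, unknown to the caller, only looks at feature 0.
    def black_box(row: Row) -> int:
        return int(row[0] > 0.5)

    data_rng = random.Random(42)
    rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
    labels = [black_box(row) for row in rows]
    print(permutation_importance(black_box, rows, labels, n_features=2))
```

In the toy example, shuffling the second feature never changes a prediction, so its importance is zero, while shuffling the first feature degrades accuracy sharply. Production teams would more likely use an established library implementation than hand-rolled code, but the logic is the same.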

Scalability issues

Deploying Explainable AI across enterprise-wide systems presents significant challenges. As AI models grow in complexity, ensuring consistent explainability across diverse applications ranging from customer interactions to supply chain management requires robust frameworks, scalable governance models, and advanced tooling.

Cultural and organizational resistance

AI transparency demands a shift in mindset. Resistance often stems from fear of exposing biases, reluctance to adopt new workflows, or concerns over accountability. Leaders must foster an AI-first culture where explainability is viewed as a business advantage rather than a compliance burden.

Resource and skill gaps

The technical expertise required to develop and implement XAI remains a barrier. Organizations need to invest in upskilling teams, hiring AI ethics specialists, and leveraging AI-specific infrastructure to bridge the gap between research-driven explainability and practical business applications.

Bridging the gap

Overcoming these challenges requires structured AI strategies that embed explainability into AI design from the outset.

Businesses must align AI initiatives with clear governance policies, invest in interpretable AI models, and create cross-functional collaboration between data science and business teams.

By proactively addressing these barriers, organizations can scale AI with confidence, ensuring models remain explainable, ethical, and strategically aligned with business goals.

Best practices for adopting explainable AI in your business

1. Align XAI with business strategy

Clarify the strategic objectives behind adopting Explainable AI (XAI). Whether it’s improving decision-making, managing risk, or boosting customer trust, XAI should align with the overall business goals.

Establish a clear use case for explainability to guide your AI initiatives and ensure they deliver measurable impact across key business areas.

2. Invest in scalable, transparent AI technologies

Choose AI tools that balance performance with transparency. Prioritize scalable technologies that integrate seamlessly into existing systems while maintaining explainability.

This ensures that AI solutions can grow with your business, delivering both accuracy and accountability at scale.

3. Foster cross-functional collaboration

XAI requires alignment across technical and business teams. Involve data scientists, compliance officers, and business leaders from the outset to ensure the AI models serve both operational and strategic needs.

This collaborative approach enables organizations to maintain transparency, meet regulatory requirements, and make data-driven decisions.

4. Prioritize continuous monitoring and adaptation

AI models evolve, and so should their explainability. Establish robust processes for ongoing monitoring and refinement. Continuously assess AI outputs to ensure they remain explainable and aligned with changing business objectives and regulatory standards.

This iterative approach helps maintain trust and ensures long-term success. By adhering to these best practices, businesses can drive effective AI adoption, fostering greater transparency and ensuring AI delivers sustainable value while mitigating risks.
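The continuous monitoring practice can be partly automated. The sketch below shows one possible check, assuming you already compute feature attributions (for example, importance scores) for each reporting period. It compares each feature's share of total attribution between a baseline period and the current period and flags large shifts for human review. The function names and the 0.15 threshold are hypothetical.

```python
from __future__ import annotations


def attribution_shares(attributions: dict[str, float]) -> dict[str, float]:
    """Convert raw attributions into each feature's share of the total."""
    total = sum(abs(v) for v in attributions.values())
    if total == 0:
        return {name: 0.0 for name in attributions}
    return {name: abs(v) / total for name, v in attributions.items()}


def flag_drift(
    baseline: dict[str, float],
    current: dict[str, float],
    threshold: float = 0.15,
) -> list[str]:
    """Return features whose attribution share moved by more than `threshold`."""
    base, cur = attribution_shares(baseline), attribution_shares(current)
    flagged = []
    for name in sorted(set(base) | set(cur)):
        delta = cur.get(name, 0.0) - base.get(name, 0.0)
        if abs(delta) > threshold:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    last_quarter = {"price": 0.5, "season": 0.3, "stock": 0.2}
    this_quarter = {"price": 0.2, "season": 0.3, "stock": 0.5}
    print(flag_drift(last_quarter, this_quarter))
```

A flagged feature does not necessarily mean the model is wrong. It signals that the model's reasoning has changed and that someone should confirm the change still matches business and regulatory expectations.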

How to choose the right explainable AI partner

Selecting the right Explainable AI (XAI) partner is pivotal to aligning AI capabilities with organizational goals, ensuring transparency, and mitigating risk. To make a sound decision, consider the following key factors.

1. Expertise and industry experience

Prioritize partners with a proven track record in AI transparency and deep industry expertise. Their understanding of regulatory, ethical, and operational dynamics will ensure that the integration of XAI aligns with both your immediate needs and long-term strategic vision.

2. Technology compatibility

Ensure that the XAI partner offers solutions that integrate seamlessly with your existing AI and IT infrastructure. This compatibility minimizes disruption, reduces time-to-value, and enables a more agile transition.

3. Customizability and scalability

As your business scales, so must your AI solutions. Select a partner that offers flexible, scalable XAI solutions capable of evolving with your enterprise, enabling future growth without incurring significant rework or investment.

4. Support and training

Beyond implementation, the right partner will provide ongoing training and support, empowering your teams to fully leverage the capabilities of XAI, optimize transparency, and refine decision-making processes.

5. Cost and ROI considerations

Evaluate not just the upfront costs but the long-term value. A strong XAI partner should provide a clear ROI roadmap, demonstrating how their solutions will enhance decision-making, reduce compliance risks, and ultimately contribute to bottom-line growth.

Embracing explainable AI for business success

As AI continues transforming industries, embracing Explainable AI (XAI) is crucial for building a sustainable, compliant, and competitive business strategy.

Even if you’re only starting to explore AI adoption, understanding the importance of transparency today will set a strong foundation for long-term success and ensure you stay ahead of evolving regulations.

The next step is integrating AI transparency into your existing business strategy. Whether you’re assessing AI’s potential or preparing for future regulations, learning from industry leaders’ XAI strategies will guide you on this journey.

AI is moving fast. Regulations are tightening. Can your business afford to fall behind?

Rapidops helps enterprises turn AI explainability into a competitive advantage. Let’s discuss how your company can stay compliant, build trust, and confidently scale AI.

Schedule a free consultation call with our AI and data experts to start building a strategy that ensures compliance, boosts confidence, and unlocks sustainable growth.

The future is here, and it’s explainable. Don’t let your competitors take the lead. Take action now to secure your company’s AI-driven success.
